Have you ever wondered why an electrical socket is the way it is? And what does it represent in terms of design?

The electrical socket makes our interaction with electric power easy.

You may not think about it, but generating, regulating and delivering electricity to homes is not an easy job: it is a very complex production chain. Yet of that whole chain, the socket, its last link, is our only point of interaction.

It is easy to use because it was designed to be. It was conceived to be easy to use in the right way and hard to use in the wrong way. It hides the complexity out of sight, behind a wall, along with annoying stuff like cables, fuses and solder joints.

No matter what you need to plug into the socket, whether it is a simple device like a light bulb or a complex one like a computer, and no matter where the electricity comes from, whether it is generated by a hydroelectric plant or an old wizard, all you have to do is plug your device into the socket.

The above introduction to sockets, which are designed to be easy to use and to hide complexity behind a wall, leads nicely to a very similar concept in OOP: interfaces.

Interfaces

There are good reasons to start using interfaces in your projects, if you haven’t yet. And even if you already do, you may not be fully aware of the opportunities they provide, so you had better keep reading.

We are going to talk about what I usually like to refer to as “Rule #0” for writing clear, readable and maintainable code:

Program to an interface, not an implementation

Before we go any further, let me make it clear: the term “interface” does not refer to interfaces in the strict sense. I mean… I’m not specifically referring to the “interface” keyword at programming language level. I am talking about abstraction.

Program to an abstraction, not an implementation

The meaning of the above quote is: write your code so that it depends on abstract concepts rather than on concrete classes.

Decoupling the implementation

Introducing interfaces in your project, and using them as the types of your arguments, allows you to have multiple implementations of the same concept. It is not unusual to switch implementation at runtime, on the basis of some kind of strategy. Most of the time, however, one single implementation will be enough for the entire application to run. Still, interfaces allow you to switch to another implementation with little or no effort, provided you drew the right abstraction from the beginning and made your code refer to it consistently.

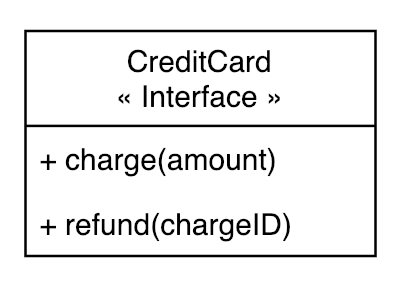

Imagine you are working on a project aimed at allowing users to pay with their credit card: the credit card is the abstraction that your code should rely on. The concrete payment mechanism is – of course – delegated to whatever payment gateway you want to use (e.g. PayPal, PAYMILL, Stripe, and so on). Usually, those systems come with proprietary frameworks that make it easy for developers to interact with them. Still, it is worth creating an abstraction and passing it around in your code rather than scattering references to the specific classes of those frameworks.
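As a minimal sketch, the abstraction could look like the following TypeScript interface. The `Money` and `ChargeID` types are my assumptions, inferred from how the card is used later in this article, and the method names are illustrative rather than taken from any gateway's framework.

```typescript
// Hypothetical value types, introduced only for this sketch.
type ChargeID = string;

interface Money {
  readonly amount: number;   // in minor units, e.g. cents
  readonly currency: string; // e.g. "EUR"
}

// The abstraction the rest of the code depends on.
// Nothing here mentions a payment gateway.
interface CreditCard {
  // Charges the card and returns an identifier for the charge.
  charge(amount: Money): ChargeID;

  // Gives back the money taken by a previous charge.
  refund(chargeId: ChargeID): void;
}
```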

Note: the abstraction is deliberately not called ICreditCard. Since the word “ICreditCard” does not exist in the business domain, there should be no room for it in your code.

Personally, I don’t like the “I<something>” naming convention for abstractions. Whether we are introducing an interface, an abstract class or a concrete class is completely irrelevant. The declaration represents a concept, so its name must be clear and explicit, and should hide the kind of construct (interface, abstract or concrete class) behind it.

The credit card, as an abstraction used for payments and refunds, has nothing to do with any implementation of the payment systems on the market. Your code should only refer to the CreditCard abstraction in order to process payments and perform refunds:

class PaymentService {

public charge(amount: Money, targetCard: CreditCard) {

const chargeId = targetCard.charge(amount);

// other stuff, like persisting or triggering events

}

public refund(chargeId: ChargeID, targetCard: CreditCard) {

/* Code for refunding */

}

}

By introducing the CreditCard abstraction, your code becomes more abstract as well. You can use the Adapter design pattern along with a service container to use any vendor-specific credit card implementation, avoiding references to those objects in your code. Should you switch to another payment system, the only thing you have to do is replace the adapters in use with new ones. The code that relies on the concept of CreditCard will remain the same because the concept has not been affected by the change.
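To make the adapter idea more concrete, here is a rough sketch. `AcmePayClient` and its methods are invented stand-ins for a vendor SDK and do not mirror any real gateway; `Money` and `ChargeID` are assumed types as well. Wiring the adapter through a service container is left out.

```typescript
type ChargeID = string;
interface Money { readonly amount: number; readonly currency: string; }
interface CreditCard {
  charge(amount: Money): ChargeID;
  refund(chargeId: ChargeID): void;
}

// Stand-in for a vendor SDK. The class and its methods are hypothetical.
class AcmePayClient {
  private nextId = 1;

  createCharge(cardToken: string, cents: number, currency: string): { id: string } {
    // A real client would call the gateway with these values; this one
    // only hands out sequential ids.
    return { id: `acme_${this.nextId++}` };
  }

  cancelCharge(id: string): boolean {
    return id.indexOf("acme_") === 0;
  }
}

// Adapter: translates the CreditCard abstraction into vendor calls.
class AcmePayCreditCard implements CreditCard {
  private readonly client: AcmePayClient;
  private readonly cardToken: string;

  constructor(client: AcmePayClient, cardToken: string) {
    this.client = client;
    this.cardToken = cardToken;
  }

  charge(amount: Money): ChargeID {
    return this.client.createCharge(this.cardToken, amount.amount, amount.currency).id;
  }

  refund(chargeId: ChargeID): void {
    if (!this.client.cancelCharge(chargeId)) {
      throw new Error(`Refund failed for charge ${chargeId}`);
    }
  }
}

// Only this line knows about the vendor; everything else sees a CreditCard.
const adaptedCard: CreditCard = new AcmePayCreditCard(new AcmePayClient(), "tok_123");
const adaptedChargeId = adaptedCard.charge({ amount: 1999, currency: "EUR" });
adaptedCard.refund(adaptedChargeId);
```

Switching gateway would mean writing another adapter of the same shape and changing the single line that constructs it.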

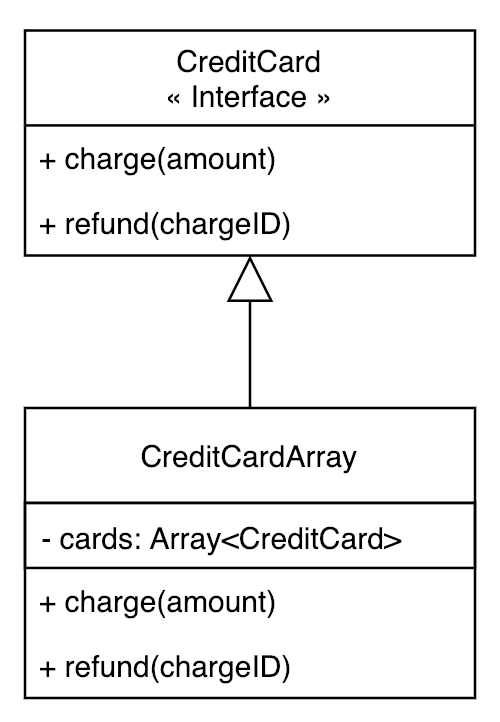

As I said before, making references to abstractions in your code allows you to use any implementation of them. For example, you could write a multi-credit-card object.

The CreditCardArray is a composition that acts as a single object. It implements the CreditCard interface and can therefore be used accordingly. But it is also composed of a collection of one or more CreditCards (you can enforce the “one or more” constraint in the constructor) and its goal is to encapsulate the logic for looping through the collection of credit cards until one that can be charged for the requested amount is found.
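The composite described above might be sketched like this. I am assuming that `charge` throws when a card is declined, and that a refund must go back to the card that took the charge; neither detail is dictated by the concept itself, and `Money` and `ChargeID` are assumed types.

```typescript
type ChargeID = string;
interface Money { readonly amount: number; readonly currency: string; }
interface CreditCard {
  charge(amount: Money): ChargeID; // assumed to throw when the charge is declined
  refund(chargeId: ChargeID): void;
}

class CreditCardArray implements CreditCard {
  private readonly cards: CreditCard[];
  // Remembers which card produced each charge, so refunds reach the same card.
  private readonly chargedBy = new Map<ChargeID, CreditCard>();

  constructor(cards: CreditCard[]) {
    // Enforce the "one or more" constraint up front.
    if (cards.length === 0) {
      throw new Error("CreditCardArray needs at least one credit card");
    }
    this.cards = cards.slice(); // defensive copy
  }

  charge(amount: Money): ChargeID {
    let lastError: unknown;
    for (const card of this.cards) {
      try {
        const chargeId = card.charge(amount);
        this.chargedBy.set(chargeId, card);
        return chargeId;
      } catch (error) {
        lastError = error; // this card was declined, try the next one
      }
    }
    // Every card was declined: report the last failure.
    throw lastError;
  }

  refund(chargeId: ChargeID): void {
    const card = this.chargedBy.get(chargeId);
    if (card === undefined) {
      throw new Error(`Unknown charge ${chargeId}`);
    }
    card.refund(chargeId);
  }
}
```

Because CreditCardArray is itself a CreditCard, code that accepts a single card can receive the whole collection without any change.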

Also think about a fake credit card implementation that intentionally fails at charging time: this implementation would allow you to test your system in such edge cases. You could end up creating as many fake cards as you want, each one returning a specific error code for you to test all possible situations.
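Such a fake could be as small as the sketch below. The `ChargeFailed` error and the error code strings are made up for illustration, as are the `Money` and `ChargeID` types; a real system would reuse whatever codes its gateway reports.

```typescript
type ChargeID = string;
interface Money { readonly amount: number; readonly currency: string; }
interface CreditCard {
  charge(amount: Money): ChargeID;
  refund(chargeId: ChargeID): void;
}

// Error carrying a gateway-style error code (hypothetical).
class ChargeFailed extends Error {
  readonly code: string;

  constructor(code: string) {
    super(`Charge failed: ${code}`);
    this.code = code;
  }
}

// Fake card that always fails at charging time with the configured code.
class FailingCreditCard implements CreditCard {
  private readonly errorCode: string;

  constructor(errorCode: string) {
    this.errorCode = errorCode;
  }

  charge(_amount: Money): ChargeID {
    throw new ChargeFailed(this.errorCode);
  }

  refund(_chargeId: ChargeID): void {
    // Nothing was ever charged, so there is nothing to give back.
    throw new Error("FailingCreditCard has no charges to refund");
  }
}

// One fake per edge case you want to exercise.
const expiredCard: CreditCard = new FailingCreditCard("card_expired");
const emptyCard: CreditCard = new FailingCreditCard("insufficient_funds");
```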

Removing what is not needed

You may be wondering if you should use an abstraction even when your application doesn’t expect to have multiple implementations. Maybe you only have one single class that does a simple task in a simple way.

Well, you actually don’t have to.

It’s not a must; it’s more of a rule of thumb. By introducing interfaces you are just one step ahead on the abstraction road, and that step is nothing a simple refactoring can’t take later.

But there’s another reason why you should stay on that road.

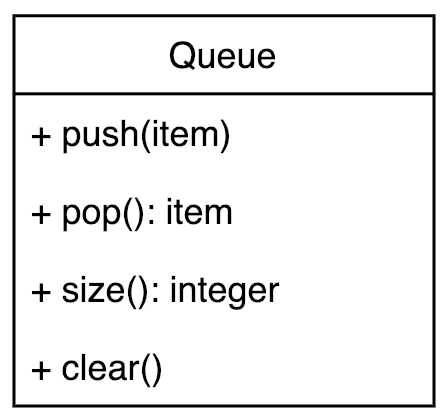

Think of a class that implements the logic of a Queue.

It’s a queue in the classic sense: a client can push items to it, pop them out, query the number of items inside the queue and clear its content by removing them all at once.
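A minimal sketch of such a class follows. The method names and the first-in, first-out behaviour are my assumptions; the type parameter defaults to `unknown` so that the class can also be written simply as `Queue`.

```typescript
class Queue<T = unknown> {
  private items: T[] = [];

  // Adds an item at the back of the queue.
  push(item: T): void {
    this.items.push(item);
  }

  // Removes and returns the oldest item, or undefined when the queue is empty.
  pop(): T | undefined {
    return this.items.shift();
  }

  // Number of items currently waiting in the queue.
  count(): number {
    return this.items.length;
  }

  // Removes every item at once.
  clear(): void {
    this.items = [];
  }
}
```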

It’s ok for a queue.

But clients use what they get.

If a client gets an instance of Queue like the one above, then the developer of that client will feel entitled to get the job done using everything at their disposal. Do you feel comfortable with that? Do you think that the developer’s sense of responsibility is enough? Do you believe that code review prevents anybody from doing the wrong thing at some point? If you feel comfortable with that, then you can go ahead and use your class as-is.

But relying on the sense of responsibility has a cost: a higher probability of errors.

Sooner or later, somebody will use a method of your object that wasn’t supposed to be used in that context. A mistake, of course. Can developers be considered unreliable? Would you really blame them? Maybe the developers made a mistake, or maybe the code reviewers, looking at what the developers were given, thought it was legitimate for them to do what they did. They are responsible for the mistake to some extent. But the truth is that the guilty developers are not that guilty, since they just used what they were given. You gave them an object and, implicitly, expected them to use only some of its functionality. If you think about it for a while, this is what happens every time a developer – even you – writes code that uses an object provided by somebody else: in order to avoid mistakes, developers must know, based on the context, what they can do with that object, which of its methods can be used and which ones are forbidden.

As time goes by, the code will need more and more maintenance, and it will become more likely that some developer uses that object in a way it wasn’t supposed to be used in that context.

So, one question arises: why should you share an object that can only be partially used? Why don’t you provide an object by exposing the right interface instead? The kind of interface allowing a client to do only the few things it is allowed to do, and nothing more?

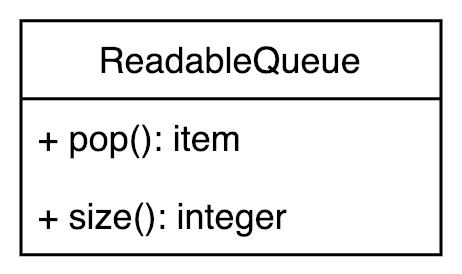

If, in a specific context, a client is only allowed to pop items out of a queue, then write that client against a queue interface that enforces this constraint.
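As a sketch, such a restricted interface might expose only the reading side. Including `count()` next to `pop()` is my assumption about what a reader needs, and the `drain` function is a hypothetical client.

```typescript
// Read-only view of a queue: no push(), no clear().
interface ReadableQueue<T = unknown> {
  pop(): T | undefined;
  count(): number;
}

// A client written against the restricted interface. Adding items or
// clearing the queue here would simply not compile.
function drain<T>(queue: ReadableQueue<T>): T[] {
  const drained: T[] = [];
  while (queue.count() > 0) {
    drained.push(queue.pop() as T); // count() > 0, so pop() yields an item
  }
  return drained;
}

// Any object with the right shape will do; this one wraps a plain array.
const backing = ["a", "b"];
const readOnlyView: ReadableQueue<string> = {
  pop: () => backing.shift(),
  count: () => backing.length,
};
const drainedItems = drain(readOnlyView);
```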

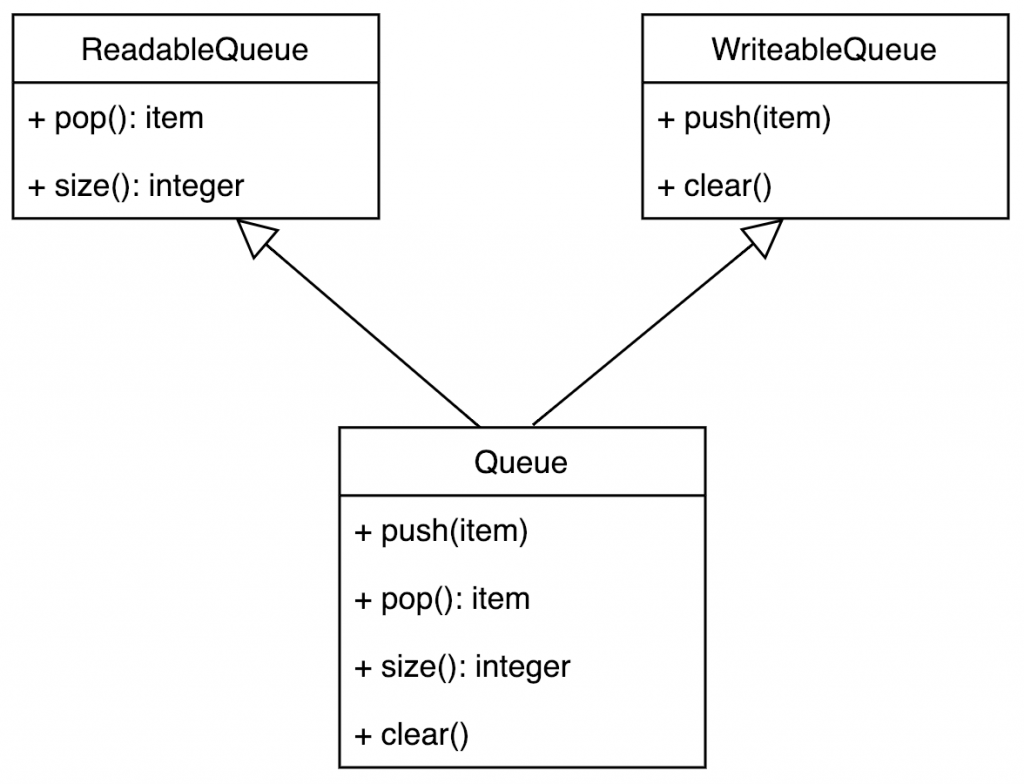

Of course, you don’t have to write many different implementations of the queue, each one with a reduced set of functionalities. You can still create one full-featured queue as an object that implements the two – or even more – segregated interfaces, and choose which of those interfaces to use in your code depending on what the client should be allowed to do with it.

Since the Queue class implements both the ReadableQueue and the WriteableQueue interfaces, it can be passed to any function that accepts those interfaces, keeping them unaware of what specific object it really is.
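One possible shape for the two interfaces and the class implementing both is sketched here. How the methods are split between ReadableQueue and WriteableQueue is my assumption, and the two helper functions are hypothetical clients.

```typescript
interface ReadableQueue<T = unknown> {
  pop(): T | undefined;
  count(): number;
}

interface WriteableQueue<T = unknown> {
  push(item: T): void;
  clear(): void;
}

// One full-featured implementation, two restricted views of it.
class Queue<T = unknown> implements ReadableQueue<T>, WriteableQueue<T> {
  private items: T[] = [];

  push(item: T): void { this.items.push(item); }
  pop(): T | undefined { return this.items.shift(); }
  count(): number { return this.items.length; }
  clear(): void { this.items = []; }
}

// A producer only sees the write side...
function enqueueGreetings(target: WriteableQueue<string>): void {
  target.push("hello");
  target.push("world");
}

// ...while a consumer only sees the read side.
function takeNext(source: ReadableQueue<string>): string | undefined {
  return source.pop();
}

const greetings = new Queue<string>();
enqueueGreetings(greetings);
const firstGreeting = takeNext(greetings);
```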

const queue = new Queue();

// ...

consumeQueue(queue);

function consumeQueue(queue: ReadableQueue) {

/*

Here the developer doesn't know what 'queue'

really is, and he can only use it by its interface

*/

}

In the example above, the consumeQueue() function receives a ReadableQueue object. It is unaware of what specific implementation it will get, and should stay agnostic about it. The only thing that the function can do is use the queue object as a ReadableQueue.

Better testing

Last but not least, introducing abstractions in your code automatically makes it easier for developers to write tests: they can inject mocks, fakes or stubs instead of the real classes. It is true that a lot of testing frameworks can create mocks starting from concrete classes, but sometimes writing fake implementations may be easier than programming mocks, depending on how handy the framework is.
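Here is a framework-free sketch of what such a test could look like. The PaymentService below is a simplified version of the one shown earlier; returning the charge id, the `RecordingCreditCard` fake and the `expectEqual` helper are all assumptions made for the sake of the example.

```typescript
type ChargeID = string;
interface Money { readonly amount: number; readonly currency: string; }
interface CreditCard {
  charge(amount: Money): ChargeID;
  refund(chargeId: ChargeID): void;
}

// Simplified service under test; returning the id is an assumption made
// so that the test has something to observe.
class PaymentService {
  charge(amount: Money, targetCard: CreditCard): ChargeID {
    return targetCard.charge(amount);
  }
}

// Hand-written fake: records every call instead of contacting a gateway.
class RecordingCreditCard implements CreditCard {
  readonly charged: Money[] = [];
  readonly refunded: ChargeID[] = [];

  charge(amount: Money): ChargeID {
    this.charged.push(amount);
    return `fake_charge_${this.charged.length}`;
  }

  refund(chargeId: ChargeID): void {
    this.refunded.push(chargeId);
  }
}

// Tiny assertion helper: throws when an expectation is not met.
function expectEqual<T>(actual: T, expected: T, label: string): void {
  if (actual !== expected) {
    throw new Error(`${label}: expected ${String(expected)}, got ${String(actual)}`);
  }
}

const fakeCard = new RecordingCreditCard();
const receipt = new PaymentService().charge({ amount: 2500, currency: "EUR" }, fakeCard);

expectEqual(receipt, "fake_charge_1", "charge id");
expectEqual(fakeCard.charged.length, 1, "number of charges");
expectEqual(fakeCard.charged[0]?.amount, 2500, "charged amount");
```

No mocking framework is involved: the fake is an ordinary class, and the service cannot tell the difference.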

Antonio Seprano

Jan 2019